Asymptotic Notation

Asymptotic notation are formal notational methods for stating the upper and lower bounds of a function. They are the notations used when describing resource needs. These are: (Pronounced, Big-O, Little-O, Omega and Theta respectively). The f(n) inside each of these notations is a description of a curve like we saw in the previous section. They describe the shape of a line.

Formally

" is " iff for some constants and , for all

" is " iff for some constants and , for all

" is " iff is and is

" is " iff is and is NOT

iff is the mathematical shorthand for "if and only if"

Informally

" is " basically means that the function describes the upper bound for

" is " basically means that the function describes the lower bound for

" is " basically means that the function describes the exact bound for

" is " basically means that the function describes the upper bound for where will never actually be reached

Worst case, Best Case, Average Case

The asymptotic notation system for bounds is often confused with the idea of worst case, best case and average case. They are actually very different things. Big-O does not describe a worst case, Omega does not describe a best case. Big-O describes an upper bound on each of these cases. Similarly Omega describes a lower bound on each of these cases. We should not mix up this idea.

In practice, most of the time we deal with either worst case or average case. We rarely ever consider best case. Each case can still have an upper bound(big-O) or lower bound (Omega).

An easy way to think about Asymptotic Notation

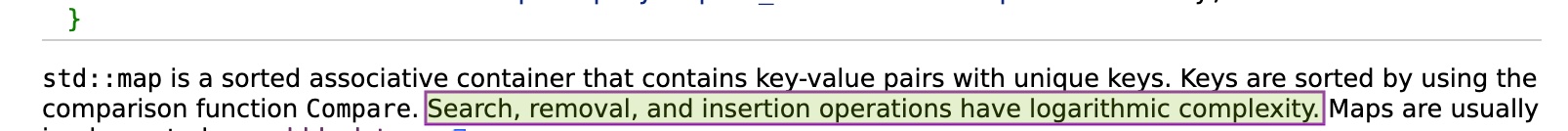

The math in algorithms analysis can be intimidating. One of the simplest ways to think about algorithms analysis is that it is simply a way to apply a rating system for your algorithms. It is like a audience rating for movies (G, PG, AA etc.). In movies these ratings tell you whether or not the movie was intended to for audieces of varying ages without you having to watch the movie first. Similarly the resource consumption functions tells you the expected resource consumption rate without you having to look at the actual code. It tells you the kind of resource needs you can expect the algorithm to exhibit as your data gets bigger and bigger. From lowest resource growth to highest resource growth, the rankings are: , , , , , , . References will sometimes state the expected resource consumption. For example, here is a screenshot of the reference page on c++ maps:

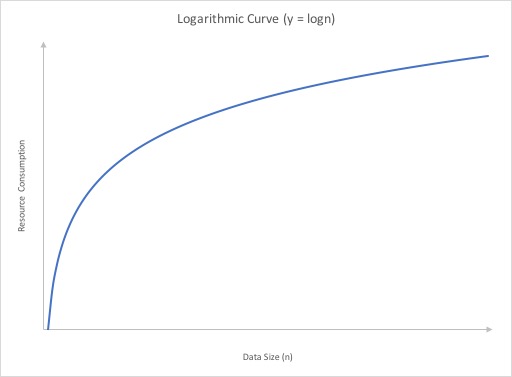

Notice the highlighted section in the above image. "...Search, removal, and insertion operations have logarithmic complexity....". This statement means the as you add more and more data to a map, the amount of time it takes to perform search/remove/insert will grow in a logarithmic manner. That is, it will take a doubling of the amount of data stored to increase the resource consumption by 1. Effectively if we were to somehow calculate the time it takes to do these operations as data gets added to the map, we will find that it will grow like the curve below:

Think about the graphs in the grow rate section. The way each curve looks. Recognizing the impact that this will have is the most important thing to learn about analyzing. That is the most important thing to understand about algorithms analysis.

A closer look at the definition of big-O

Mathematical definitions are typically exact. If you say that a < b then a is always smaller than b... there isn't any room for anything else. What asymptotic notations used in algorithms analysis do is relax this exactness. It allows us to get an idea of resource usage without having to be completely exact about it.

Let's take a closer look a the formal definition for big-O analysis

" is " iff for some constants and , for all

Start with the first portion of the statement

" is iff"

The above is portion is stating what we are defining. In other words, that part says: In order for to be it must meet the criteria set out in the rest of the definition. It also gives you an idea of what pieces are involved with the definition

- n is the size of the data set.

- T(n) is a function that describes the usage of a resource with respect to n

- f(n) is a function that describes a curve..., , , etc.

- O(f(n)) means that the curve described by f(n) is an upper bound for the resource needs of a function T(n)

The next part of the definition:

... for some constants and ,...

and refer to two values that are both constants. constants are values that do not change with respect to n. No matter what n is, and must stay the same. The exact values of and are not defined, but in order for the definition to be true, we must be able to find a value for each of these constants such that the rest of the statement is true. Note that it doesn't matter what they are.. you can pick any value you want for these constants... as long as the statement holds using them, you are fine.

......

This means that if we were to plot on a graph, the curve would fall underneath some stretched (scaled by a constant) version of the curve. What this means is that does not have to be under the specific curve described by . It is allowed to fall under a constantly scaled version of the . For example instead of having to be under an exact curve like , it can be under the curve or even the curve. In fact it can be any constant, as long as it is a constant. A constant is simply a number that does not change with . So as gets bigger, you cannot change what the value for . The actual value of the constant does not matter.

The last portion of the definition is:

... for all

This is another relaxation of the mathematical definition. It means part of the curve is allowed to actually be above the curve. simply fall under the curve at some value of (we call this point ). and once it falls under the curve, it cannot go back above the curve for any value of n greater than

In summary, when we are looking at big-O, we are in essence looking for a description of the growth rate of the resource increase. The exact numbers do not actually matter in the end.